1. Scope

Problem Statement:

Software often works perfectly in a developer's environment but fails in others because of different configurations, missing dependencies, or incompatible systems. This is famously summarized as the “It works on my machine” problem.

This write-up walks through how I tackled that problem by containerizing a production-ready app with Docker and deploying it on Amazon ECS, using Terraform for infrastructure and GitHub Actions for CI/CD. The objective: consistency, scalability, and automation across every stage of app delivery.

2. Breakdown of the Deployment Journey

2.1 Informal Description

Imagine building a machine that only runs in your garage, not anywhere else. Docker solves this by packaging applications into containers — portable, self‑contained units that run identically in any environment.

2.2 What is Docker?

Docker is a platform that allows developers to:

- Package applications along with dependencies into containers – Containers bundle an application with all its required libraries, dependencies, and configuration into a single unit, so the application runs consistently across different systems.

- Run them in isolated environments – Each container gets its own isolated filesystem, process space, and network, which prevents conflicts between different software stacks. This isolation improves security and makes testing and debugging easier.

- Deploy them almost anywhere – Because containers include everything the app needs to run, they can be deployed on any host with a container runtime such as Docker. The main caveat is that containers share the host's kernel, so Linux images need a Linux host (or the Linux VM that Docker Desktop provides on macOS and Windows) and a matching CPU architecture, or a multi-platform image.

Why it matters:

It eliminates “works on my machine” problems by ensuring consistent behavior across dev, test, and prod environments.

2.3 Real‑World Case

Goals:

- Uniform behavior across all environments – Applications behave consistently across development, testing, and production environments, reducing bugs caused by environmental differences. This ensures predictable outcomes and simplifies troubleshooting.

- Fast, repeatable deployments – Automated deployment processes allow applications to be deployed quickly and reliably with minimal manual intervention. This boosts development speed and reduces the risk of human error.

- Easy scaling as usage grows – The system can handle increased user demand by adding resources or instances without major changes. This ensures performance and availability as your application grows.

3. Technical Implementation

3.1 Step 1: Containerizing the App

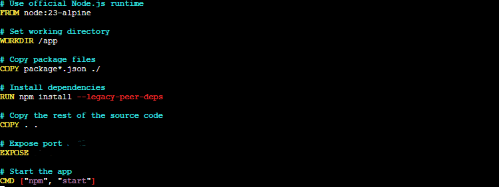

Dockerfile Highlights:

- Base image: Node.js – The Dockerfile uses a Node.js image as the base, providing a pre-configured environment with Node.js and npm installed.

- Copies source code – The application’s source code is copied from your local machine into the Docker image, typically into the working directory defined in the container.

- Installs dependencies and runs server – The Dockerfile installs required npm packages using npm install and then starts the application server using a command like npm start or node server.js.
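The following small scaffolding script shows what a Dockerfile following these three highlights might look like. It is a minimal sketch. The Node.js tag (node:22-alpine), port 3000, and entry file server.js are hypothetical defaults, not the project's actual values. The script copies package*.json before the rest of the source so that the dependency layer stays cached when only application code changes. It also uses npm ci, which assumes a committed package-lock.json.

```python
# Hypothetical defaults: adjust the Node.js tag, port, and entry file to your app.
NODE_IMAGE = "node:22-alpine"
APP_PORT = 3000
ENTRY_FILE = "server.js"


def render_dockerfile(node_image: str = NODE_IMAGE, port: int = APP_PORT,
                      entry: str = ENTRY_FILE) -> str:
    """Return the text of a minimal Node.js Dockerfile."""
    lines = [
        f"FROM {node_image}",        # base image: Node.js (npm included)
        "WORKDIR /app",              # working directory inside the image
        "COPY package*.json ./",     # copy manifests first so the install layer caches
        "RUN npm ci --omit=dev",     # install production deps (needs package-lock.json)
        "COPY . .",                  # copy the rest of the source code
        f"EXPOSE {port}",            # document the port the server listens on
        f'CMD ["node", "{entry}"]',  # start the server
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print the Dockerfile; redirect to a file to use it, e.g. python scaffold.py > Dockerfile
    print(render_dockerfile(), end="")
```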

Example:

docker build -t your-app .

docker run -d -p <PORT>:<PORT> your-app

Here -d runs the container in detached (background) mode, and -p maps a host port to the container's port.

3.2 Step 2: Deploying to the Cloud with ECS + EC2

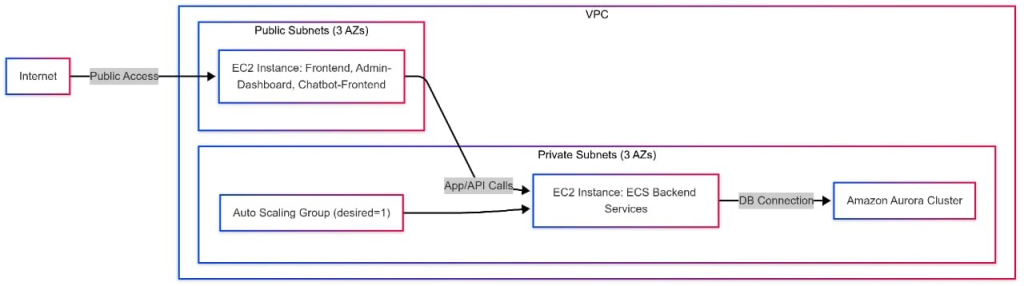

ECS (Elastic Container Service) schedules and runs containers on virtual machines.

Setup Summary:

- Microservices as separate containerized tasks

- EC2 launch type (we provision and manage the underlying EC2 instances ourselves, unlike with Fargate)

3.3 Image Management

Docker images are pushed to Amazon Elastic Container Registry (ECR), a managed, private image registry from which ECS pulls images when it starts tasks.

3.4 Infrastructure as Code (IaC) with Terraform

Automated provisioning of:

- ECS cluster + EC2 instances – An ECS (Elastic Container Service) cluster is a logical grouping of EC2 instances used to run containerized applications. These EC2 instances act as the compute capacity where your containers are deployed and managed.

- Launch configurations and Auto Scaling – Launch configurations define the instance settings (AMI, instance type, etc.) used by Auto Scaling Groups. Auto Scaling adjusts the number of EC2 instances based on demand to maintain availability and control cost. AWS now recommends launch templates instead, since launch configurations do not support newer EC2 features or instance types.

- IAM roles and permissions – IAM (Identity and Access Management) roles assign specific permissions to AWS services or users, enabling secure access control. ECS tasks and EC2 instances use IAM roles to interact with AWS resources securely.
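An IAM role has a trust policy that states which AWS service may assume it. The sketch below builds the two trust policies an ECS-on-EC2 setup typically needs. ECS tasks assume roles through the ecs-tasks.amazonaws.com principal. Container instances assume theirs through ec2.amazonaws.com, via an instance profile. In Terraform, the same document would usually be passed to the assume_role_policy argument of aws_iam_role with jsonencode. The permission policies are attached separately, for example the AWS managed AmazonEC2ContainerServiceforEC2Role and AmazonECSTaskExecutionRolePolicy. This is an illustration under those assumptions, not the project's actual IAM configuration.

```python
import json

ECS_TASKS_PRINCIPAL = "ecs-tasks.amazonaws.com"  # task role and task execution role
EC2_PRINCIPAL = "ec2.amazonaws.com"              # container instance role (instance profile)


def trust_policy(service_principal: str) -> dict:
    """Trust policy allowing the given AWS service to assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": service_principal},
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    for principal in (ECS_TASKS_PRINCIPAL, EC2_PRINCIPAL):
        print(json.dumps(trust_policy(principal), indent=2))
```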

3.5 Task Definitions

Each microservice had a task definition specifying:

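- Docker image – The image the container runs, typically an ECR repository URI plus a tag.

- CPU & Memory – The CPU units (1024 units = 1 vCPU) and memory (in MiB) allocated to the container. ECS uses these values to place tasks on instances with enough free capacity, and a container that exceeds its hard memory limit is stopped.

- Port Mappings – Port mappings connect a container's internal port to a port on the host, so external systems or users can reach the application. With the EC2 launch type and bridge networking, a host port of 0 lets ECS assign a dynamic host port, so several copies of a task can run on the same instance behind a load balancer.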
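The sketch below shows how those three fields fit together in a task definition. It builds one in the JSON shape accepted by aws ecs register-task-definition --cli-input-json. The family name, image URI, account ID, and sizes are hypothetical placeholders. hostPort is set to 0 to request dynamic port mapping in bridge mode. In Terraform, the containerDefinitions list would typically be passed to aws_ecs_task_definition as a JSON string.

```python
import json


def task_definition(family: str, image: str, cpu: int, memory: int,
                    container_port: int, host_port: int = 0) -> dict:
    """Build a single-container ECS task definition for the EC2 launch type."""
    return {
        "family": family,
        "requiresCompatibilities": ["EC2"],
        "networkMode": "bridge",
        "containerDefinitions": [{
            "name": family,
            "image": image,            # usually an ECR URI plus tag
            "cpu": cpu,                # CPU units: 1024 = 1 vCPU
            "memory": memory,          # hard memory limit in MiB
            "essential": True,         # task stops if this container stops
            "portMappings": [{
                "containerPort": container_port,
                "hostPort": host_port,  # 0 = dynamic host port (bridge mode)
                "protocol": "tcp",
            }],
        }],
    }


if __name__ == "__main__":
    td = task_definition(
        family="orders-service",  # hypothetical microservice name
        image="123456789012.dkr.ecr.us-east-1.amazonaws.com/orders-service:latest",
        cpu=256, memory=512, container_port=3000,
    )
    print(json.dumps(td, indent=2))
```

Saved as taskdef.json, the output could be registered with aws ecs register-task-definition --cli-input-json file://taskdef.json.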

3.6 ECS Services

An ECS service was created for each task definition to:

- Keep microservices running – The service maintains the desired number of running tasks for each microservice, monitoring their health and starting replacements when tasks stop or crash. This keeps the application stable and available.

- Auto-replace failed containers – Failed or unhealthy tasks (for example, those failing load balancer health checks) are detected and replaced with new ones, minimizing downtime without manual intervention.
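The behaviour described above is a reconciliation loop: compare what is running with the desired count and correct the difference. The following is a toy model of that idea, not how the ECS scheduler is actually implemented. Task, start_task, and reconcile are hypothetical names, and a boolean stands in for real health checks.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Task:
    task_id: str
    healthy: bool = True


_next_id = itertools.count(1)


def start_task() -> Task:
    """Stand-in for launching a new task from the task definition."""
    return Task(task_id=f"task-{next(_next_id)}")


def reconcile(tasks: list[Task], desired_count: int) -> list[Task]:
    """Drop unhealthy tasks, then start or stop tasks to match desired_count."""
    running = [t for t in tasks if t.healthy]  # failed tasks are discarded
    while len(running) < desired_count:        # replace missing capacity
        running.append(start_task())
    return running[:desired_count]             # scale in if there are too many


if __name__ == "__main__":
    fleet = [Task("a"), Task("b", healthy=False), Task("c")]
    print(reconcile(fleet, desired_count=3))
```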

3.7 Networking and Load Balancing

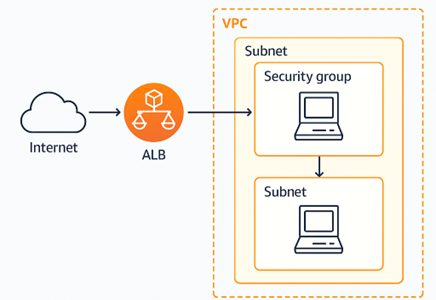

Using AWS VPC features:

- VPC, subnets, security groups – A VPC is a logically isolated network in AWS where you can launch resources. Subnets divide the VPC into smaller IP ranges across different Availability Zones. Security Groups act as virtual firewalls that control inbound and outbound traffic to AWS resources.

- Application Load Balancer (ALB) for traffic routing, health checks, and domain‑based access – An ALB automatically distributes incoming traffic across multiple targets like EC2 instances, ensuring high availability. It performs health checks to route traffic only to healthy instances. ALB also supports host-based and path-based routing, enabling domain-specific access to services.
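Subnet planning is easy to get wrong by hand. The sketch below uses Python's standard ipaddress module to split a VPC CIDR into /24 subnets, with one public and one private subnet per Availability Zone. The CIDR and AZ names are hypothetical. Two facts shape the layout: AWS reserves five addresses in every subnet, and an ALB must be attached to subnets in at least two Availability Zones.

```python
import ipaddress

# Hypothetical layout: a /16 VPC split into /24 subnets across two AZs.
VPC_CIDR = "10.0.0.0/16"
AZS = ["us-east-1a", "us-east-1b"]
AWS_RESERVED_PER_SUBNET = 5  # AWS reserves the first four and the last address


def plan_subnets(vpc_cidr: str, azs: list[str], new_prefix: int = 24) -> dict:
    """Assign consecutive subnets to (tier, az) pairs: public first, then private."""
    pool = ipaddress.ip_network(vpc_cidr).subnets(new_prefix=new_prefix)
    plan = {}
    for tier in ("public", "private"):
        for az in azs:
            plan[(tier, az)] = next(pool)
    return plan


def usable_hosts(subnet: ipaddress.IPv4Network) -> int:
    return subnet.num_addresses - AWS_RESERVED_PER_SUBNET


if __name__ == "__main__":
    for (tier, az), net in plan_subnets(VPC_CIDR, AZS).items():
        print(f"{tier:<8} {az}  {net}  ({usable_hosts(net)} usable)")
```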

3.8 CI/CD with GitHub Actions

Pipeline flow:

- Code pushed

- Docker image built

- Image pushed to ECR

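- ECS service updated with a forced new deployment, so new tasks pull the freshly pushed image. This works when the task definition references a mutable tag such as latest; if every build uses a unique tag, a new task definition revision has to be registered instead.

This reduced manual effort and made deployments faster and more reliable.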
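The pipeline steps map onto a short sequence of CLI commands. The sketch below generates that sequence as a dry run and does not execute anything. The account ID, region, repository, cluster, and service names are hypothetical. It tags each image with both the short commit SHA (for traceability) and latest, on the assumption that the task definition references :latest. It also omits the ECR login step, which in GitHub Actions is commonly handled by the aws-actions/amazon-ecr-login action. GITHUB_SHA is the commit SHA that GitHub Actions exposes to every workflow run.

```python
import os

# Hypothetical values; in GitHub Actions these would come from secrets or variables.
ACCOUNT_ID = "123456789012"
REGION = "us-east-1"
REPO = "your-app"
CLUSTER = "your-cluster"
SERVICE = "your-service"


def ecr_image(account: str, region: str, repo: str, tag: str) -> str:
    """ECR image reference: <account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>."""
    return f"{account}.dkr.ecr.{region}.amazonaws.com/{repo}:{tag}"


def pipeline_commands(sha: str) -> list[list[str]]:
    sha_image = ecr_image(ACCOUNT_ID, REGION, REPO, sha[:7])
    latest_image = ecr_image(ACCOUNT_ID, REGION, REPO, "latest")
    return [
        ["docker", "build", "-t", sha_image, "-t", latest_image, "."],  # build once, two tags
        ["docker", "push", sha_image],
        ["docker", "push", latest_image],
        # Redeploy the service so new tasks pull the re-pushed :latest image.
        ["aws", "ecs", "update-service", "--cluster", CLUSTER,
         "--service", SERVICE, "--force-new-deployment"],
    ]


if __name__ == "__main__":
    commit = os.environ.get("GITHUB_SHA", "0000000")  # fallback for local dry runs
    for cmd in pipeline_commands(commit):
        print(" ".join(cmd))
```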

4. Docker Power Tips

4.1 Common Commands

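| Command | Purpose |
|---|---|
| docker stats | Monitor live CPU, memory, network, and I/O usage of running containers |
| docker logs <id> | View a container's logs (add -f to follow them) |
| docker exec -it <id> sh | Open a shell inside a running container |
| docker rm -f <id> | Force-remove a container, killing it first if it is running |
| docker rmi <image> | Remove an image |
| docker system prune -a | Remove stopped containers, unused networks, all unused images, and build cache (volumes are kept unless --volumes is added) |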
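docker stats is interactive by default, but its --no-stream and --format options make it scriptable. The sketch below takes a one-off snapshot using the {{.Name}}, {{.CPUPerc}}, and {{.MemUsage}} template fields and parses each line into numbers. The helper names are hypothetical. The unit table covers the binary and decimal suffixes Docker prints, and the sample line in the test is illustrative, not real output from this project.

```python
import re
import subprocess

STATS_FORMAT = "{{.Name}}\t{{.CPUPerc}}\t{{.MemUsage}}"

UNITS = {
    "B": 1,
    "kB": 1000, "MB": 1000**2, "GB": 1000**3, "TB": 1000**4,
    "KiB": 1024, "MiB": 1024**2, "GiB": 1024**3, "TiB": 1024**4,
}


def to_bytes(size: str) -> float:
    """Convert a size such as '50MiB' or '1.944GiB' to bytes."""
    match = re.fullmatch(r"([\d.]+)\s*([A-Za-z]+)", size.strip())
    if match is None or match.group(2) not in UNITS:
        raise ValueError(f"unrecognised size: {size!r}")
    return float(match.group(1)) * UNITS[match.group(2)]


def parse_stats_line(line: str) -> dict:
    """Parse one 'name<TAB>cpu%<TAB>used / limit' line."""
    name, cpu, mem = line.split("\t")
    used, limit = (part.strip() for part in mem.split("/"))
    return {
        "name": name,
        "cpu_percent": float(cpu.rstrip("%")),
        "mem_used_bytes": to_bytes(used),
        "mem_limit_bytes": to_bytes(limit),
    }


def snapshot() -> list[dict]:
    """Run docker stats once (requires the Docker CLI and a running daemon)."""
    out = subprocess.run(
        ["docker", "stats", "--no-stream", "--format", STATS_FORMAT],
        capture_output=True, text=True, check=True,
    ).stdout
    return [parse_stats_line(line) for line in out.splitlines() if line.strip()]
```

For example, the following lists containers using more than 80% of their memory limit: `[r["name"] for r in snapshot() if r["mem_used_bytes"] > 0.8 * r["mem_limit_bytes"]]`.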

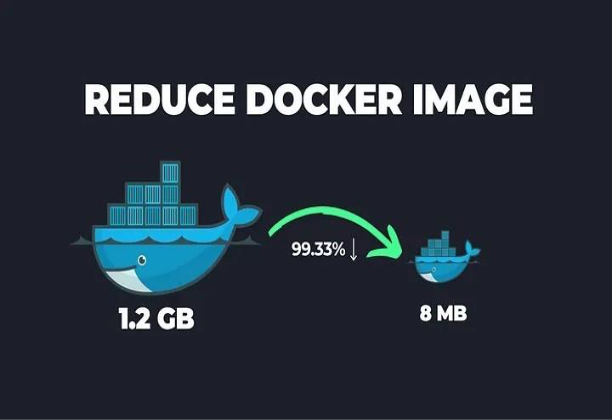

4.2 Shrinking Image Sizes by 99%

- Use minimal base images (e.g., Alpine) – Minimal base images like Alpine reduce image size and attack surface, so containers pull faster and ship fewer potentially vulnerable packages. They include only essential libraries and tools. Note that Alpine uses musl libc rather than glibc, so some native npm modules may need extra build steps.

- Multi-stage builds – Multi-stage builds separate the build and runtime environments in a Dockerfile, producing cleaner and smaller final images by copying only the necessary artifacts from the build stage.

- Clean up unnecessary files – Removing temporary files, caches, and build dependencies from the image reduces its size and potential vulnerabilities. This leads to faster deployment times and less storage consumption.

4.3 Dockerfile Best Practices

- Use .dockerignore – The .dockerignore file excludes unnecessary files (like node_modules, .git, etc.) from the build context, so they are never sent to the builder or picked up by COPY instructions. This shrinks the build context, speeds up builds, and keeps local artifacts out of the image.

- Combine similar commands – Combining commands in a single RUN instruction (e.g., RUN apt-get update && apt-get install -y …) reduces the number of layers in the image, resulting in smaller and more efficient Docker images.

- Use multi-stage builds – Keep compilers, dev dependencies, and build tools in an earlier stage and copy only the runtime artifacts into the final image, which improves security as well as size.

- Pin dependency versions – Pinning versions (e.g., node:22 rather than node or node:latest) makes builds more predictable and avoids surprises from new base-image releases. A tag like node:22 still moves across minor and patch releases; a full version tag or an image digest gives fully reproducible builds. For npm packages, a committed package-lock.json plays the same role.
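Pinning is easy to enforce in CI with a small check. The sketch below flags FROM lines whose image has no tag or uses :latest. It skips references to earlier build stages (FROM build), the special scratch image, and images pinned by digest (@sha256:...). This is a hypothetical helper based on simple regex parsing, not a full Dockerfile parser. For example, it does not expand ARG variables in FROM lines.

```python
import re

# FROM [--platform=...] <image> [AS <stage>]
FROM_RE = re.compile(
    r"^[ \t]*FROM[ \t]+(?:--platform=\S+[ \t]+)?(\S+)(?:[ \t]+AS[ \t]+(\S+))?",
    re.IGNORECASE | re.MULTILINE,
)


def unpinned_base_images(dockerfile_text: str) -> list[str]:
    """Return base images that have no tag or use the 'latest' tag."""
    stages: set[str] = set()
    flagged: list[str] = []
    for match in FROM_RE.finditer(dockerfile_text):
        image, alias = match.group(1), match.group(2)
        is_stage = image.lower() in stages
        if alias:
            stages.add(alias.lower())  # later FROM lines may refer to this stage
        if is_stage or image == "scratch" or "@" in image:
            continue  # earlier stage, empty image, or pinned by digest
        name = image.rsplit("/", 1)[-1]  # ignore registry host:port and path
        tag = name.split(":", 1)[1] if ":" in name else None
        if tag is None or tag == "latest":
            flagged.append(image)
    return flagged
```

In CI, a non-empty result could fail the build before docker build ever runs.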

4.4 Volume Mounting for Development

Bind-mount the source code into the container for:

- Live reload – Combined with a file watcher running inside the container (for example, nodemon for a Node.js app), code edits on the host are picked up immediately, without rebuilding the image or restarting the container manually.

- Faster dev loop – Speeds up the code-test-debug cycle by reducing build and deployment time, enabling quicker feedback and improving developer productivity.

- Realistic testing in container – Allows testing in an environment that closely mirrors production, using containers to ensure consistent behavior across different stages of development and deployment.
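The mechanism behind these benefits is a bind mount of the project directory over the app's working directory. The sketch below builds such a docker run command. The image name, /app path, and port are hypothetical. It also adds an anonymous volume at /app/node_modules. Without it, the bind mount would hide the node_modules directory installed in the image. The command is only printed, never executed.

```python
import os
import shlex


def dev_run_cmd(image: str, src_dir: str = ".", workdir: str = "/app",
                port: int = 3000) -> list[str]:
    """docker run command that bind-mounts src_dir over workdir for development."""
    source = os.path.abspath(src_dir)  # use an absolute host path for the bind source
    return [
        "docker", "run", "--rm", "-it",
        "--mount", f"type=bind,source={source},target={workdir}",
        # Anonymous volume so the bind mount does not mask node_modules from the image.
        "-v", f"{workdir}/node_modules",
        "-p", f"{port}:{port}",
        image,
    ]


if __name__ == "__main__":
    print(shlex.join(dev_run_cmd("your-app")))  # shell-safe display of the argv list
```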

4.5 Service‑to‑Service Networking

- Access containers by service name – When using user-defined bridge or overlay networks, containers can resolve each other using their service names, simplifying inter-container communication without needing IPs.

- Use user-defined bridge networks – A user-defined bridge network creates an isolated internal network where containers can communicate without exposing services externally. Unlike the default bridge network, it also provides the name-based discovery described above.

- Avoid unnecessary port exposure – Only expose ports that need external access to reduce the attack surface. Internal services should communicate through the Docker network without publishing their ports to the host.
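The three practices above combine as follows. The sketch creates a user-defined network, starts an internal api container with no published ports, and starts a web container that reaches the API by service name and is the only one published on the host. The network and container names, images, ports, and the API_URL variable are hypothetical, and the commands are only printed.

```python
import shlex

NETWORK = "app-net"  # hypothetical user-defined bridge network


def network_setup_cmds(api_image: str, web_image: str) -> list[list[str]]:
    return [
        ["docker", "network", "create", NETWORK],
        # Internal service: on the network, no -p, so nothing is published on the host.
        ["docker", "run", "-d", "--name", "api", "--network", NETWORK, api_image],
        # Public entry point: resolves the API by its name ("api") on the shared network.
        ["docker", "run", "-d", "--name", "web", "--network", NETWORK,
         "-e", "API_URL=http://api:3000", "-p", "8080:3000", web_image],
    ]


if __name__ == "__main__":
    for cmd in network_setup_cmds("your-api-image", "your-web-image"):
        print(shlex.join(cmd))
```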

4.6 Docker Compose for Local Testing

- Start all services with docker compose up – The docker compose up command (docker-compose up with the older standalone tool) launches all services defined in docker-compose.yml. It builds images for services that have a build section and are not yet built, then starts the containers together, making multi-container setups easy to manage.

- Define service dependencies – The depends_on key in docker-compose.yml makes a service start only after its dependencies have started. By default it does not wait for them to be “ready”; to do that, give the dependency a healthcheck and use depends_on with condition: service_healthy.

- Share environment configs – Environment variables and configurations can be shared across services using .env files or the environment section in docker-compose.yml, promoting consistency and easier configuration management across environments.
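Start ordering from depends_on is a topological sort of the dependency graph. The sketch below models it with Python's standard graphlib, using hypothetical services (web, api, db, cache). It shows only start order and not readiness, which needs the healthcheck condition described above. A circular dependency raises CycleError, because no valid start order exists.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical services and their depends_on lists from docker-compose.yml.
DEPENDS_ON = {
    "web": ["api"],
    "api": ["db", "cache"],
    "db": [],
    "cache": [],
}


def start_order(depends_on: dict[str, list[str]]) -> list[str]:
    """Return services ordered so each starts after everything it depends on."""
    return list(TopologicalSorter(depends_on).static_order())


if __name__ == "__main__":
    print(start_order(DEPENDS_ON))
```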

5. Final Thoughts

- Docker solved the “works on my machine” problem through containerization.

- ECS made production deployment scalable and resilient.

- Terraform automated infrastructure.

- GitHub Actions enabled seamless CI/CD.

Together, they empowered a single developer to deploy like an enterprise.

6. Glossary of Terms

- Docker: A platform for containerizing applications with dependencies

- Container: A lightweight, portable package of software and its environment

- ECS: Amazon’s Elastic Container Service for running Docker containers

- EC2: AWS virtual machines used to run container tasks in ECS

- ECR: Elastic Container Registry — AWS’s Docker image repository

- Terraform: Infrastructure‑as‑Code tool used to define and manage AWS resources

- Task Definition: ECS blueprint defining container properties and configurations

- ALB: Application Load Balancer — routes traffic and checks health

- CI/CD: Continuous Integration/Deployment pipelines for automated software updates

- GitHub Actions: GitHub’s automation tool for CI/CD workflows

Read the next Knowledge Bank post on “Troubleshooting of Microservices on Amazon ECS” to tackle real-world deployment challenges using ECS (EC2), Terraform, and GitHub Actions.