1. Introduction

This document provides a comprehensive guide for building Retrieval-Augmented Generation (RAG) applications with Amazon Bedrock Knowledge Bases, along with essential Generative AI concepts.

It is intended for AI engineers, architects, product managers, and enterprise teams seeking to leverage Generative AI for accurate, scalable, and secure solutions.

2. Generative AI Overview

Generative AI refers to AI systems capable of creating new content in text, code, image, audio, and video formats. These systems use models trained on large datasets to learn patterns and generate human-like outputs.

Core capabilities include:

- Text generation (chatbots, summarization, translation)

- Code generation (assistants such as GitHub Copilot)

- Image and video generation

- Speech synthesis and audio enhancement

- Data-to-text narration for insights

Business applications span multiple industries and include automated reporting, customer support chatbots, marketing content generation, and intelligent analytics.

3. Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) is a hybrid AI technique that combines information retrieval from external data sources with text generation from a Large Language Model (LLM).

Whereas a standalone LLM relies solely on its training data, a RAG system fetches up-to-date, domain-specific information and injects it into the prompt before the model generates the final answer.

Why RAG is Important

- Accuracy – Reduces hallucinations by grounding responses in real data.

- Freshness – Retrieves the most current information without retraining the model.

- Domain Specialization – Incorporates internal, proprietary, or regulated data sources.

- Multi-Modal Capability – Can work with text, structured tables, and images.

Core Workflow

- Retrieve relevant context from external sources based on the user query.

- Merge retrieved data with the query and generate an informed response using an LLM.

- Post-process the output to add citations, apply formatting, or enforce domain-specific rules.
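The three workflow steps above can be sketched in a few lines of plain Python. This is a toy illustration, not how Bedrock implements retrieval: the in-memory `DOCUMENTS` corpus, the word-overlap retriever, and the `generate` placeholder (which stands in for a real LLM call) are all assumptions made for the example.

```python
import re

# Hypothetical in-memory corpus standing in for an external data source.
DOCUMENTS = {
    "doc-1": "Bedrock Knowledge Bases manage ingestion, retrieval, and augmentation.",
    "doc-2": "Chunking splits long documents into smaller passages for retrieval.",
    "doc-3": "Citations link each answer back to its source documents.",
}


def tokenize(text):
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Step 1: rank documents by word overlap with the query (toy retriever)."""
    query_tokens = tokenize(query)
    scored = [
        (len(query_tokens & tokenize(text)), doc_id)
        for doc_id, text in documents.items()
    ]
    # Drop documents with no overlap, then sort by score (ties by id).
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: (-s[0], s[1]))
    return [doc_id for _, doc_id in ranked[:top_k]]


def build_prompt(query, doc_ids, documents):
    """Step 2a: merge the retrieved passages with the user query."""
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in doc_ids)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


def generate(prompt):
    """Step 2b: placeholder for an LLM call; it only reports the prompt size."""
    return f"(model answer based on a {len(prompt)}-character prompt)"


def add_citations(answer, doc_ids):
    """Step 3: post-process the answer by appending source identifiers."""
    return f"{answer} Sources: {', '.join(doc_ids)}"


def answer_query(query, documents=DOCUMENTS):
    """Run retrieve, augment, generate, and post-process in order."""
    doc_ids = retrieve(query, documents)
    prompt = build_prompt(query, doc_ids, documents)
    return add_citations(generate(prompt), doc_ids)
```

Calling `answer_query("How does chunking help retrieval?")` retrieves the two passages that share words with the question and appends their identifiers to the placeholder answer. In a real system, the retriever would query a vector store and `generate` would call a foundation model.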

4. Amazon Bedrock Knowledge Bases – Core Features

- Fully managed RAG workflow (ingestion, retrieval, augmentation)

- Multi-modal data support

- Built-in session memory for multi-turn conversations

- Automatic citations for transparency

- Enterprise-grade security and compliance features
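As a rough sketch of how an application might call the managed workflow, the example below builds a request for the `retrieve_and_generate` operation of the boto3 `bedrock-agent-runtime` client. The knowledge base ID and model ARN are placeholders you would supply, the helper names are hypothetical, and the `ask` function assumes boto3 is installed and AWS credentials are configured. Passing the returned session ID into the next call is how a multi-turn conversation would typically be continued.

```python
def build_retrieve_and_generate_request(question, knowledge_base_id, model_arn,
                                        session_id=None):
    """Build keyword arguments for the RetrieveAndGenerate operation."""
    request = {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,  # placeholder value
                "modelArn": model_arn,                 # placeholder value
            },
        },
    }
    # Reuse a session ID from an earlier response to keep conversation context.
    if session_id is not None:
        request["sessionId"] = session_id
    return request


def ask(question, knowledge_base_id, model_arn, session_id=None):
    """Send the question to a knowledge base (requires AWS access)."""
    import boto3  # imported lazily so the builder above works without boto3

    client = boto3.client("bedrock-agent-runtime")
    response = client.retrieve_and_generate(
        **build_retrieve_and_generate_request(
            question, knowledge_base_id, model_arn, session_id
        )
    )
    return {
        "answer": response["output"]["text"],
        "session_id": response["sessionId"],
        "citations": response.get("citations", []),
    }
```

Separating the request builder from the network call keeps the request shape easy to inspect and unit test without touching AWS.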

5. Best Practices & Scalable RAG Methodology

Core Best Practices

- Use clear, structured prompts with few-shot examples to guide model responses.

- Chunk data effectively for efficient retrieval.

- Combine semantic and keyword-based search for optimal coverage.

- Apply content moderation filters to ensure safe and compliant outputs.

- Select models considering latency, cost, and accuracy trade-offs.
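To illustrate the practice of combining semantic and keyword-based search, the sketch below merges two ranked result lists with reciprocal rank fusion (RRF). RRF is one common fusion technique chosen here for illustration; this guide does not specify how Bedrock's managed hybrid search combines results, and the document IDs and the constant `k=60` are assumptions.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into a single ranking.

    Each document earns 1 / (k + rank) from every list it appears in,
    so documents ranked well by multiple retrievers rise to the top.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties are broken by document ID.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from a semantic (vector) search and a keyword search.
semantic_results = ["a", "b", "c"]
keyword_results = ["c", "a", "d"]
fused = reciprocal_rank_fusion([semantic_results, keyword_results])
```

Here `a` and `c` appear in both lists, so they outrank `b` and `d`, which each appear in only one.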

Scalable Implementation Steps

- Begin with a small dataset to validate the end-to-end RAG pipeline.

- Ingest and preprocess data with effective chunking and metadata tagging.

- Choose the right foundation model and vector store for your performance needs.

- Automate ingestion and retrieval processes with robust infrastructure.

- Deploy, monitor performance, and iteratively improve based on user feedback.
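The chunking and metadata tagging step can be prototyped on a small dataset before any managed ingestion is configured. The following is a minimal sketch that assumes fixed-size word windows with overlap; the chunk sizes, the metadata field names, and the example source URI are illustrative choices, not settings taken from Bedrock.

```python
def chunk_text(text, source, chunk_size=50, overlap=10):
    """Split text into overlapping word windows, each tagged with metadata."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        window = words[start:start + chunk_size]
        chunks.append({
            "text": " ".join(window),
            "metadata": {
                "source": source,             # where the chunk came from
                "chunk_index": len(chunks),   # position of the chunk in the document
                "start_word": start,          # offset of the first word
            },
        })
        # Stop once this window has reached the end of the document.
        if start + chunk_size >= len(words):
            break
    return chunks


# Example: a 12-word document split into 5-word chunks overlapping by 2 words.
sample = " ".join(f"w{i}" for i in range(12))
sample_chunks = chunk_text(sample, "s3://example-bucket/report.txt",
                           chunk_size=5, overlap=2)
```

The overlap keeps sentences that straddle a boundary retrievable from either neighboring chunk, and the metadata lets retrieved passages be filtered or cited by source.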

7. Enverus Case Study

Enverus built ‘Instant Analyst’ using Bedrock Knowledge Bases to serve energy sector clients with actionable intelligence from proprietary datasets.

- Integrated multi-modal retrieval for text and tabular data

- Leveraged SQL-based structured data retrieval for precision analytics

- Automated ingestion and processing pipelines using AWS services

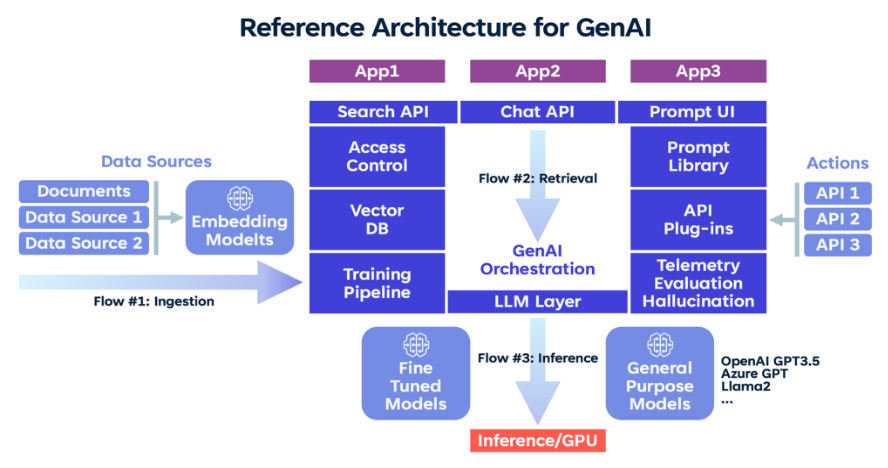

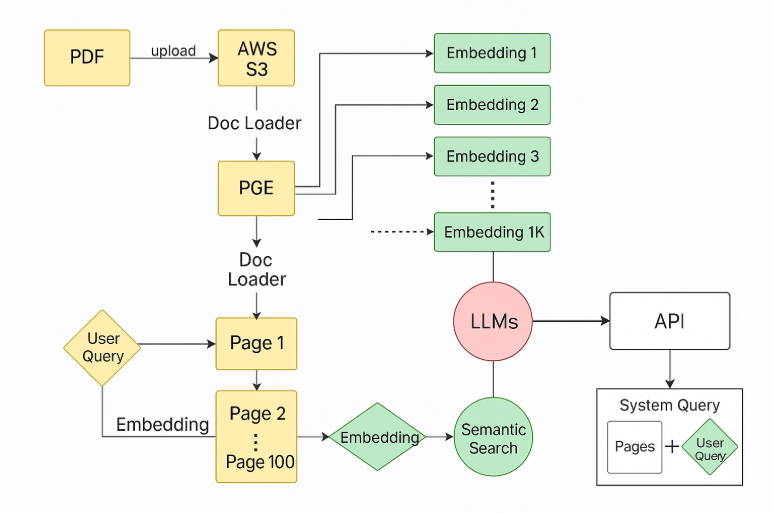

8. Architectures

Data Ingestion Pipeline: Amazon S3, AWS Lambda, Amazon SQS, Amazon DynamoDB, Amazon Textract, Amazon Bedrock

RAG Solution Architecture: Amazon Aurora, Amazon OpenSearch Service, Amazon EKS, Amazon SageMaker, Bedrock Knowledge Bases

These architectures enable scalable, reliable, and low-latency retrieval and generation workflows.
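The source lists the ingestion services but not how they are wired together. One plausible arrangement, assumed here purely for illustration, is that an S3 upload notification triggers a Lambda function, which queues each new object in SQS for downstream processing. The sketch below shows that first hop; the `INGEST_QUEUE_URL` environment variable and the message fields are hypothetical.

```python
import json
from urllib.parse import unquote_plus


def s3_event_to_messages(event):
    """Turn an S3 event notification into one JSON message per object."""
    messages = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        messages.append(json.dumps({
            "bucket": s3_info["bucket"]["name"],
            # Object keys arrive URL-encoded in S3 event notifications.
            "key": unquote_plus(s3_info["object"]["key"]),
        }, sort_keys=True))
    return messages


def lambda_handler(event, context):
    """Lambda entry point: queue every uploaded object for processing."""
    import os
    import boto3  # available in the Lambda Python runtime

    sqs = boto3.client("sqs")
    queue_url = os.environ["INGEST_QUEUE_URL"]  # hypothetical configuration
    messages = s3_event_to_messages(event)
    for body in messages:
        sqs.send_message(QueueUrl=queue_url, MessageBody=body)
    return {"queued": len(messages)}
```

Buffering uploads through a queue lets downstream workers (for example, text extraction and indexing) process documents at their own pace and retry failures independently.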

9. Advanced Generative AI Features in Bedrock

- GraphRAG for linking entities in knowledge graphs – Enhances retrieval by connecting related concepts and relationships for deeper insights.

- Streaming responses for real-time conversational experiences – Delivers partial results instantly for faster, interactive chats.

- Multi-modal retrieval combining documents, images, and structured data – Expands capability to fetch and integrate varied data formats into a single response.

10. Security & Governance

- Data Protection & Compliance – Ensures encryption at rest and in transit, regional data residency compliance, and automated redaction of sensitive information to meet privacy regulations.

- Access Control & Accountability – Implements IAM-based access policies with detailed audit logging and traceability for secure, controlled, and trackable data usage.
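To make the idea of automated redaction concrete, the sketch below masks two kinds of sensitive values before text is indexed or logged. It is a simplified, regex-based illustration and not the mechanism Bedrock uses; the patterns cover only email addresses and US Social Security number formats and would need extending for real compliance work.

```python
import re

# Illustrative patterns only; production redaction needs broader coverage.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text):
    """Replace sensitive values with labels and count what was removed."""
    counts = {}
    for label, pattern in REDACTION_PATTERNS.items():
        text, replaced = pattern.subn(f"[{label}]", text)
        if replaced:
            counts[label] = replaced
    return text, counts


clean_text, redaction_counts = redact(
    "Contact jane.doe@example.com, SSN 123-45-6789."
)
```

Returning the counts alongside the cleaned text gives an audit trail of how many values were masked without storing the values themselves.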

11. Key Takeaways

- Combining RAG with Generative AI delivers accurate, context-rich answers

- Bedrock Knowledge Bases remove infrastructure management complexity

- Multi-modal and structured data support expands AI’s business use cases

- Continuous monitoring, prompt engineering, and data strategy are critical for success

Illustrations

Below is a workflow representing Generative AI: